Best Optics For Superior Space Exploration

At some point in our lives, we have all gazed up at the dark, starry, and beautiful emptiness we know as space, wondering what lies beyond what our naked eyes can see. Exploring the unknown has always attracted our attention as a species, and space exploration is no exception.

Current technology allows us to observe distant planets, asteroids, natural satellites, and comets millions of miles away. This is possible because of the cameras and lenses fitted on artificial satellites and spacecraft, which send images back to us. This article explores astrophotography, smallsat imagers, and the role optics have played in recent space exploration.

Brief History of Human Space Journey and Astrophotography

Today, space exploration is closely related to astrophotography. Pictures and astronomy have developed a relationship over time, and space images have become the primary way we see and connect with the universe.

In 1946, the first picture of Earth from space was taken from a V-2 rocket launched from White Sands, New Mexico. It was the first of many images of Earth and space to come. In 1957, the Soviet Union put the first human-made satellite into Earth’s orbit. Since then, we have launched more than 5,000 artificial satellites, owned by over eighty countries.

Smallsat imagers are not only in Earth’s orbit today but also elsewhere in our solar system. The crucial point is that our history is filled with building satellites and equipping them with state-of-the-art cameras.

In the 1960s and 70s, we sent more spacecraft to the Moon and Venus, and today we are sending even more. This, in turn, has enhanced our understanding of space. In the next section, we will look at the best optics for space and how they function.

Satellites and Cameras

We have looked at a brief history in the first section, and in this section, we go deep into our topic. Let’s quickly define two terms.

What is a Satellite?

A satellite is a natural or artificial object that orbits a larger object in space.

What is a Spacecraft?

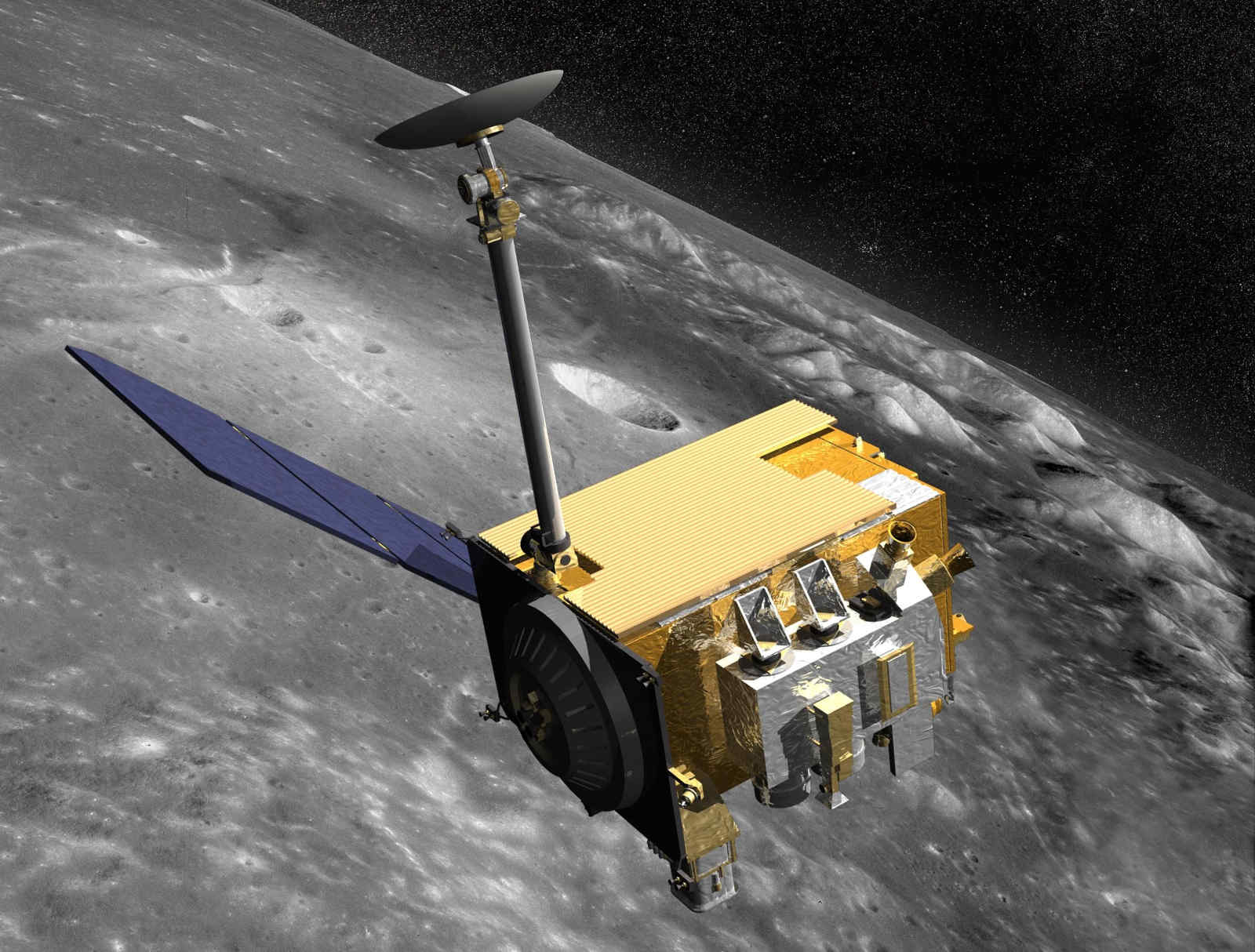

A spacecraft is a vehicle used to travel in space. Spacecraft are classified by the role they perform. The important ones for this article are penetrator, lander, and rover spacecraft, all used for exploration.

Now that those two terms are clear, we can turn to the reason we defined them. What is a satellite camera?

To get pictures, we fit cameras on satellites and exploration spacecraft. These space cameras must cope with harsh conditions to produce their results.

These imaging devices use state-of-the-art cameras to detect distant objects, carry out photogrammetric modeling and spectral mapping, and observe the climate of planets. All these functions call for a camera backed by high computing power. We will therefore look at the features a satellite camera should possess.

They include:

- Small and lightweight. Every gram counts in space, so the lighter, the better.

- Radiation tolerance. The imaging device must be sturdy enough to withstand the cosmic rays and other radiation it will encounter.

- Spectral range. The device should have a spectral range suited to its mission, meaning it should sense the relevant low- and high-frequency bands (anywhere from microwaves to ultraviolet rays) to produce the best results.

- Signal-to-noise ratio (SNR). Ideally, we want a high SNR from the imaging device. A low SNR means noise has corrupted the signal, which here is the image.
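
To make the signal-to-noise point concrete, here is a minimal sketch of a commonly used per-pixel SNR estimate. It assumes the only noise sources are photon shot noise, dark current, and read noise, and every number in it is hypothetical rather than taken from a real instrument.

```python
import math


def snr(signal_e: float, dark_e: float = 0.0, read_noise_e: float = 0.0) -> float:
    """Estimate the signal-to-noise ratio of one pixel.

    All quantities are in electrons. The function name and parameters are
    illustrative assumptions, not part of any real camera API.
    """
    if signal_e <= 0:
        return 0.0
    # Signal and dark current follow Poisson statistics, so their variance
    # equals their mean; read noise is given as a standard deviation and
    # is therefore squared before being added.
    noise = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise


# Hypothetical bright and faint targets observed with the same sensor.
bright = snr(signal_e=10_000, dark_e=50, read_noise_e=5)
faint = snr(signal_e=100, dark_e=50, read_noise_e=5)
print(f"bright target SNR: {bright:.1f}")
print(f"faint target SNR:  {faint:.1f}")
```

The faint target ends up with a much lower SNR because the fixed noise sources make up a larger share of what the pixel collects, which is why faint, distant objects are the hard case for a space camera.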

How do these features affect the way a satellite camera works? All of them are critical for the successful capture of an image. The type of camera fitted on a spacecraft or satellite depends on the kind of mission it is undertaking.

For satellites pointed at Earth, the camera also depends on its purpose. Governments are strict about the use of such cameras. A military-grade satellite will typically have a more powerful camera than a weather satellite. The regulations are rigid, in part to protect privacy.

The technology that has made all of this possible is the sensor behind the camera’s lens. In the last part of this article, we will discuss these sensors.

Before we get to that, note that in remote sensing, satellite cameras are mainly characterized by their resolution: spatial, temporal, spectral, geometric, and radiometric.
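
Spatial resolution is the easiest of these to put a number on. As a rough sketch, the ground sample distance (the patch of ground covered by one pixel) of a camera looking straight down can be approximated from the altitude, the pixel size, and the focal length. The function name and the values below are assumptions chosen for illustration, not the specifications of any real satellite.

```python
def ground_sample_distance(altitude_m: float,
                           pixel_pitch_m: float,
                           focal_length_m: float) -> float:
    """Approximate ground size of one pixel, in metres.

    Assumes a nadir-pointing camera (looking straight down) and uses
    similar triangles: pixel size / focal length = ground size / altitude.
    """
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return altitude_m * pixel_pitch_m / focal_length_m


# Hypothetical smallsat: 500 km altitude, 5 micrometre pixels, 1 m focal length.
gsd = ground_sample_distance(altitude_m=500_000,
                             pixel_pitch_m=5e-6,
                             focal_length_m=1.0)
print(f"each pixel covers about {gsd:.1f} m of ground")
```

The relationship also shows the trade-off with the "small and lightweight" requirement: a sharper view from the same orbit needs a longer focal length or smaller pixels, and longer optics tend to be bigger and heavier.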

Camera Sensors

In the past, it was nearly impossible to build cameras that worked well in space, but in 1969, Willard Boyle and George Smith invented the CCD sensor. The charge-coupled device (CCD) revolutionized image quality and went on to fly on missions such as Vega, launched in 1984 toward Halley’s comet.

How Does a Satellite Camera Work Using CCD?

In simple terms, circuits built on silicon form light-sensitive elements known as pixels. These pixels absorb photons (light) in a precise pattern and convert them into electric charge, which is then read out and converted into a digital copy of the image.
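
The path from light to numbers can be sketched in a few lines. This is a simplified model, not how any particular sensor is implemented: it assumes a fixed quantum efficiency (the share of photons that produce an electron), a full-well limit on how much charge a pixel can hold, and a converter that turns charge into digital numbers. All parameter names and values are hypothetical.

```python
def pixel_to_digital(photons: float,
                     quantum_efficiency: float = 0.5,
                     full_well_e: float = 50_000,
                     gain_e_per_dn: float = 12.5,
                     bit_depth: int = 12) -> int:
    """Convert a photon count at one pixel into a digital number (DN)."""
    # 1. Only a fraction of the incoming photons free an electron.
    electrons = photons * quantum_efficiency
    # 2. A pixel can only hold so much charge; beyond that it saturates.
    electrons = min(electrons, full_well_e)
    # 3. The readout converts charge into an integer, limited by bit depth.
    dn = int(electrons / gain_e_per_dn)
    return min(dn, 2 ** bit_depth - 1)


# One hypothetical row of pixels, from dark sky to an overexposed star.
row_photons = [0, 1_000, 20_000, 200_000]
row_dn = [pixel_to_digital(p) for p in row_photons]
print(row_dn)
```

The last pixel receives ten times more light than the one before it, but its value is capped by the full-well limit, which is why very bright objects appear washed out in an image.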

The CCD has been the dominant sensor for space exploration over the last three decades, but the rise of CMOS (complementary metal-oxide-semiconductor) sensors now threatens that position as they catch up with the CCD.

The primary advantage CMOS has over CCD is a high frame rate with little noise. CMOS is also cheaper to produce, which appeals to manufacturers, and many are pulling back on CCD to develop more CMOS sensors.

Lastly, the question of how to use a satellite camera is best answered by the experts who dedicate more and more time to this emerging industry.

People and Space Exploration

The importance of space exploration for humans is immense. Advances in optics and sensor technology have led to further improvements in CMOS sensors.

A leading manufacturer, OnSemi, stopped producing CCDs in 2019, and Sony will follow suit by 2025. Do you think it’s a wise decision to stop manufacturing CCD sensors? Let us know your answer below.